- Run date – April 15, 2023

- We ran this benchmark on Google Cloud in zone us-west4-a, using the configuration below, to see whether disk throughput scales when multiple disks are attached to a single instance.

- One n2-standard-32 instance running Debian 11

- Four 500 GB extreme persistent disks (PDs), each provisioned with 60,000 IOPS, no snapshots

- No optimization at OS level

- Note that there is a throughput limit per instance (which depends on the machine type) and per extreme PD (which depends on disk size). We still wanted to see how performance scales with smaller block sizes and how latency is affected. This may help anyone who wants to use multiple disks on a single instance.

- Treat this as our review of how extreme persistent disk performance scales on a single instance, across different block sizes, with the configuration above.

- We used direct I/O and/or synchronous I/O to bypass the OS page cache.
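To illustrate the direct I/O setting, the sketch below builds an fio command line with `--direct=1`, which opens the target with O_DIRECT so reads and writes bypass the page cache. This is a hypothetical job, not necessarily the one we ran: the device paths, queue depth, job count and runtime are placeholders. fio accepts several files in one `--filename` value, separated by `:`.

```python
import shlex


def build_fio_args(devices, block_size="4k", rw="randread", iodepth=32, numjobs=4, runtime_s=60):
    """Return an fio argv list for a direct-I/O test across one or more block devices.

    All defaults here are illustrative assumptions, not the settings from our run.
    """
    if not devices:
        raise ValueError("at least one device is required")
    return [
        "fio",
        "--name=pd-scaling",
        "--filename=" + ":".join(devices),  # fio separates multiple files with ':'
        "--direct=1",                        # O_DIRECT: bypass the OS page cache
        "--ioengine=libaio",                 # Linux native asynchronous I/O engine
        f"--rw={rw}",
        f"--bs={block_size}",
        f"--iodepth={iodepth}",
        f"--numjobs={numjobs}",
        f"--runtime={runtime_s}",
        "--time_based",                      # run for the full runtime, looping if needed
        "--group_reporting",                 # aggregate stats across all jobs
    ]


# Hypothetical device paths for two attached PDs; adjust to your instance.
args = build_fio_args(["/dev/sdb", "/dev/sdc"], block_size="8k")
print(shlex.join(args))
```

The sketch only prints the command; it does not run fio. The default `randread` is read-only, whereas a write workload pointed at raw devices destroys whatever data is on them.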

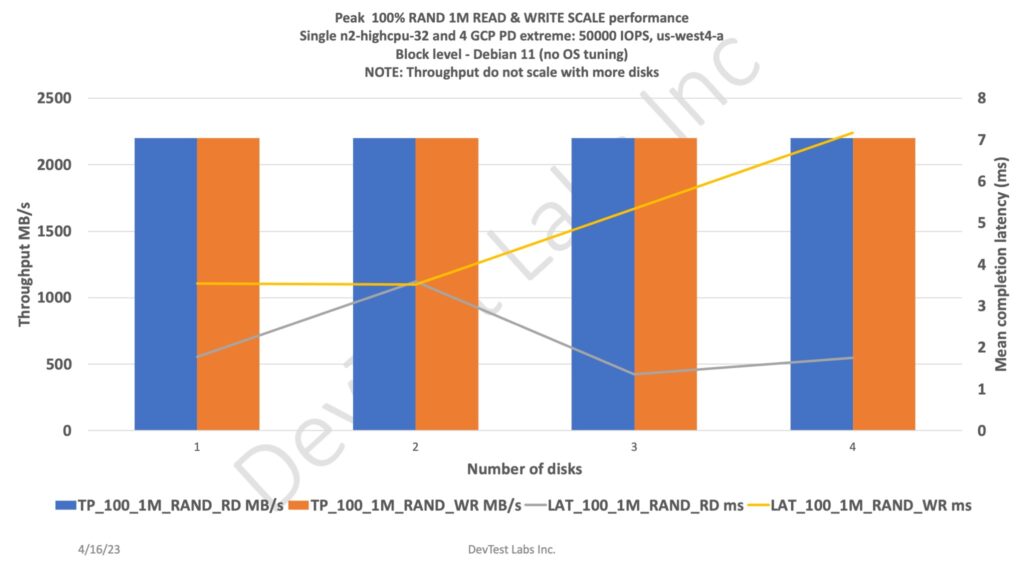

- We picked the best peak throughput numbers we observed, along with the mean completion latency measured at each of those throughputs.

- Whether an application can fully use the available bandwidth (the advertised “up to” number) depends on its block size, its read/write ratio, and whether its access pattern is sequential or random.

- We can help identify which storage system is optimal for your application in terms of performance.
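The dependence on block size, and the link between peak throughput and mean latency, can be sanity-checked with two simple relations: throughput = IOPS × block size (capped by any bandwidth limit), and Little's law (I/Os in flight = IOPS × mean latency). This is a back-of-the-envelope sketch, assuming a steady state, a single fixed block size, and only an IOPS cap and a bandwidth cap as constraints. The 60,000 IOPS figure comes from our disk configuration; the bandwidth cap and the latency example are hypothetical values for illustration.

```python
"""Back-of-the-envelope relations between block size, IOPS, throughput and latency.

Assumptions (ours, not measured): steady state, one fixed block size, and the only
constraints are an IOPS cap and a bandwidth cap.
"""

MiB = 1024 * 1024


def iops_from_throughput(throughput_bytes_per_s: float, block_size_bytes: int) -> float:
    """Convert throughput to IOPS for a fixed block size."""
    return throughput_bytes_per_s / block_size_bytes


def mean_latency_s(outstanding_ios: float, iops: float) -> float:
    """Little's law (L = lambda * W) solved for mean time each I/O spends in flight."""
    if iops <= 0:
        raise ValueError("iops must be positive")
    return outstanding_ios / iops


def achievable_throughput(block_size_bytes: int, iops_cap: float, bandwidth_cap: float) -> float:
    """Throughput ceiling (bytes/s) when both an IOPS cap and a bandwidth cap apply."""
    return min(iops_cap * block_size_bytes, bandwidth_cap)


IOPS_CAP = 60_000           # per-disk IOPS provisioned in this test
BANDWIDTH_CAP = 1200 * MiB  # hypothetical bandwidth cap, for illustration only

# Small blocks hit the IOPS cap first; large blocks hit the bandwidth cap first.
for kib in (4, 8, 16, 64, 256, 1024):
    ceiling = achievable_throughput(kib * 1024, IOPS_CAP, BANDWIDTH_CAP) / MiB
    print(f"{kib:>5} KiB blocks: ceiling {ceiling:,.0f} MiB/s")

# Illustrative only: 128 I/Os in flight (e.g. iodepth=32 x numjobs=4), 400 MiB/s at 4 KiB.
iops = iops_from_throughput(400 * MiB, 4096)  # 102400 IOPS
print(f"~{mean_latency_s(128, iops) * 1e3:.2f} ms mean latency at {iops:.0f} IOPS")
```

Raising queue depth can raise throughput only until a cap is reached; beyond that point Little's law implies the extra in-flight I/Os show up as higher mean latency.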

- A few observations:

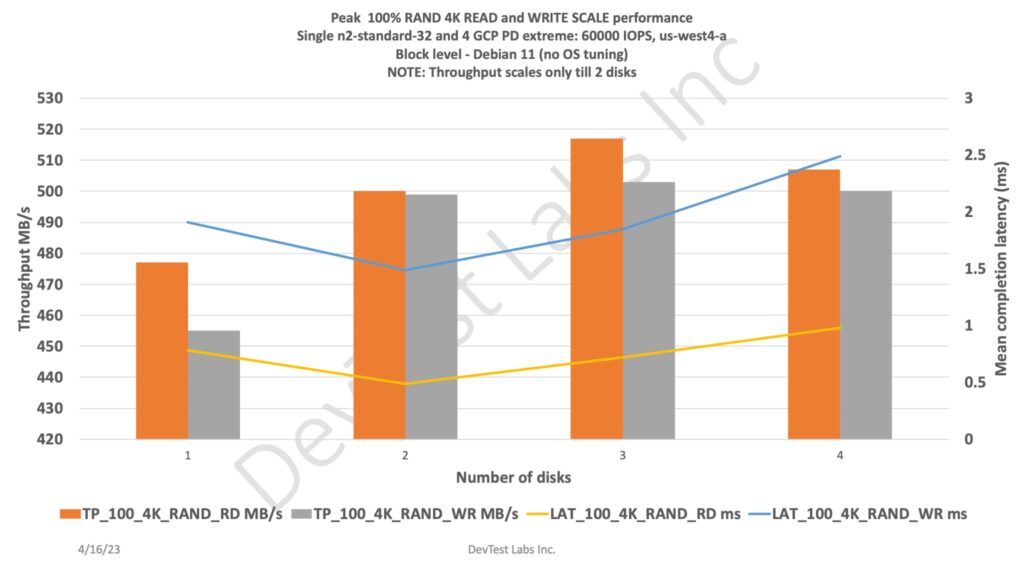

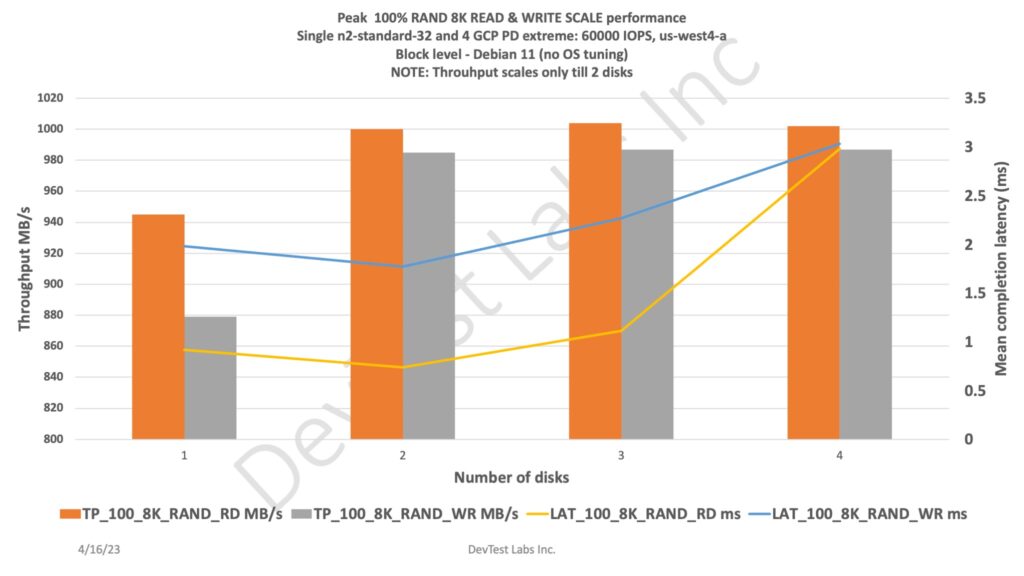

- We saw scaling only for 4K and 8K block-size workloads, and only up to 2-3 disks; adding a fourth disk did not help. We suspect we are hitting the per-instance storage IOPS limit.

- Next, we plan to run the same test with multiple instances, one PD per instance, to see whether that scales.
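If a per-instance cap is the bottleneck, a simple model predicts that the aggregate ceiling grows with disk count only until the sum of per-disk limits reaches that cap. The sketch below encodes this model plus a scaling-efficiency metric (the N-disk result divided by N times the single-disk result: 1.0 is linear scaling, 1/N is no gain). It assumes per-disk limits add linearly and nothing else (CPU, network) limits I/O first. The per-instance value is a hypothetical placeholder, not an official Google Cloud limit and not something we measured.

```python
import math


def effective_limit(num_disks: int, per_disk_limit: float, per_instance_limit: float) -> float:
    """Aggregate IOPS (or throughput) ceiling for num_disks identical disks on one instance.

    Assumes per-disk limits add linearly and the instance applies a single cap on top.
    """
    if num_disks < 1:
        raise ValueError("num_disks must be at least 1")
    return min(num_disks * per_disk_limit, per_instance_limit)


def disks_until_saturation(per_disk_limit: float, per_instance_limit: float) -> int:
    """Smallest disk count at which the per-instance cap becomes the binding limit."""
    return max(1, math.ceil(per_instance_limit / per_disk_limit))


def scaling_efficiency(single_disk_result: float, n_disk_result: float, num_disks: int) -> float:
    """Measured N-disk result divided by ideal linear scaling (N x single-disk result)."""
    if single_disk_result <= 0 or num_disks < 1:
        raise ValueError("need a positive single-disk result and at least one disk")
    return n_disk_result / (num_disks * single_disk_result)


PER_DISK_IOPS = 60_000       # provisioned per extreme PD in this test
PER_INSTANCE_IOPS = 150_000  # hypothetical instance cap, NOT an official GCP figure

for n in range(1, 5):
    print(n, "disk(s): model ceiling", effective_limit(n, PER_DISK_IOPS, PER_INSTANCE_IOPS))
print("cap binds from", disks_until_saturation(PER_DISK_IOPS, PER_INSTANCE_IOPS), "disks")
```

To apply `scaling_efficiency`, substitute the measured single-disk and N-disk numbers from the charts. Values that fall toward 1/N as disks are added are consistent with, though not proof of, a shared per-instance cap.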

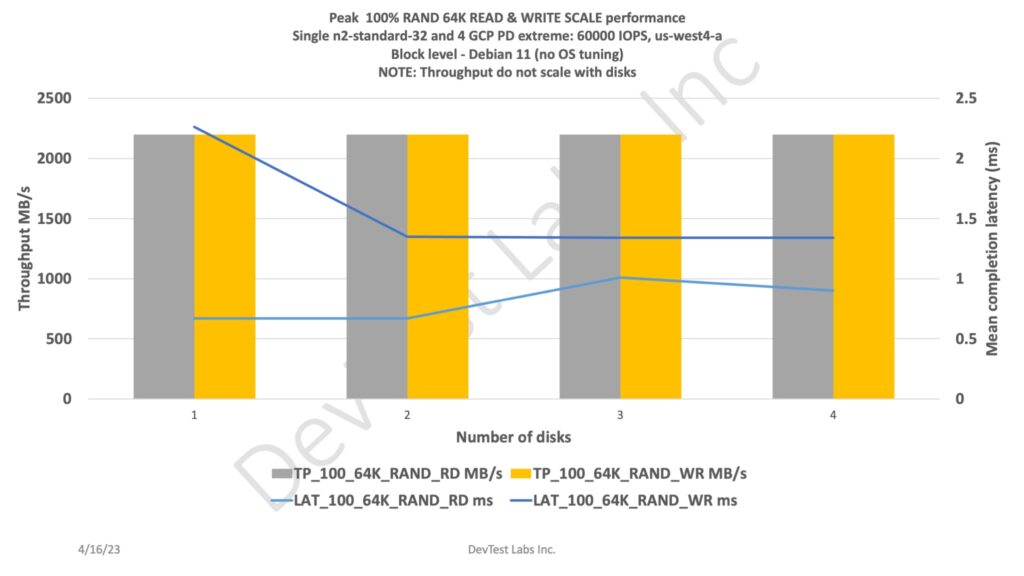

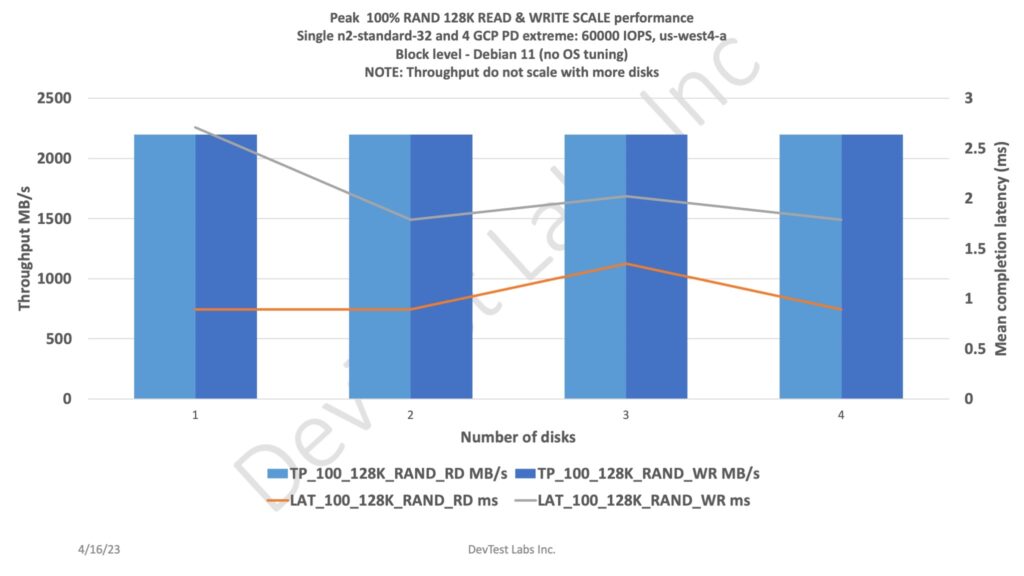

- For larger block sizes, performance does not scale at all: it stays the same as with a single disk. Again, this could be due to a per-instance limit.

- Refer to the charts below for results.

- If you use these numbers, treat them with caution: they are ballpark figures that can vary with many factors (current cloud usage in the zone, the physical backing store, the instance type, etc.). These are the numbers we got in our run. We are not liable for any loss or damages caused by using this data.